How intelligent is AI today?

I recently attended a workshop by Arts Impact AI, which is holding conversations on AI across Canada. I quickly discovered that my expectation of what Artificial Intelligence (AI) is wasn't quite in the right place for the conversation at hand. I expected the discussion to centre on intelligent machines that think and work like humans, exploring attributes such as self-learning or the ability to intelligently change their own programming based on new input.

Algorithm Making

We spent the morning considering algorithms capable of rapidly analyzing vast amounts of data. An intuitive example came in the form of a group exercise. Group 1 developed an algorithm (five characteristics based on a set of 12 images of convicted criminals) to identify the most likely criminal in a crowd; group 2, playing the computer, applied the algorithm; and group 3, playing the humans, was tasked with simply identifying the criminal without an algorithm. My colleagues in group 1, people from diverse backgrounds and ethnicities who live on the traditional territories of self-governing First Nations in the Yukon (and yes, that might have mattered to our decision-making), opted for criteria that did not include racial stereotypes. Needless to say, we broke the machine.

Each group struggled seriously with the ethical implications of its role. This was the point, of course: do the designers of algorithms simply reinforce stereotypes rooted in a highly biased judicial system that disproportionately affects Indigenous people and people of colour, and often men who are visibly part of these groups? Or do they write an algorithm that avoids those stereotypes and focuses on other characteristics?

Big Data Analysis

To my way of thinking, this kind of AI application lives in the realm of big data analysis. While I had expected AI to feel unfamiliar and new, this felt extremely familiar: as a market researcher, I have followed work on "big data" analysis for years, and seen how faster computers have increased our ability to analyze truly vast data sets manyfold. Indeed, the biggest advantage is speed that no single human brain can match.

The AI application this group exercise mirrored is based on the analysis of a vast amount of data, e.g. 10,000+ photographs of convicted criminals, using computer facial recognition. This analysis identifies statistical probabilities for the parameters that were set. Humans then use those probabilities to program an algorithm, which seeks to identify people in large crowds who match the analysis. By definition, this kind of analysis looks to the past to inform the future; or in this case, to become the future.
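To make that pipeline concrete, here is a minimal Python sketch, assuming each past record has already been reduced to a set of labelled characteristics (the real systems described above work on photographs via facial recognition, which this sketch does not attempt). The traits, the `k` parameter and the function names `learn_criteria`, `score` and `most_likely` are all hypothetical, invented for illustration rather than taken from the workshop.

```python
from collections import Counter

# Hypothetical past records: each one is a set of observed characteristics.
# These labels are invented for illustration only.
PAST_RECORDS = [
    {"hat", "beard", "tattoo"},
    {"hat", "glasses"},
    {"beard", "tattoo", "scar"},
    {"hat", "beard"},
]


def learn_criteria(records, k):
    """Turn past records into frequency-based 'probabilities' and keep the
    k most common characteristics (ties broken alphabetically) as criteria."""
    counts = Counter(trait for record in records for trait in record)
    total = len(records)
    # Share of past records that show each characteristic.
    probabilities = {trait: n / total for trait, n in counts.items()}
    top = sorted(probabilities, key=lambda t: (-probabilities[t], t))[:k]
    return {t: probabilities[t] for t in top}


def score(person, criteria):
    """Sum the weights of every criterion this person matches."""
    return sum(weight for trait, weight in criteria.items() if trait in person)


def most_likely(crowd, criteria):
    """Return the name of the crowd member who best matches the past."""
    return max(crowd, key=lambda name: score(crowd[name], criteria))


if __name__ == "__main__":
    criteria = learn_criteria(PAST_RECORDS, k=3)
    crowd = {"A": {"hat", "beard"}, "B": {"glasses"}, "C": {"scar", "tattoo"}}
    print(criteria)  # {'beard': 0.75, 'hat': 0.75, 'tattoo': 0.5}
    print(most_likely(crowd, criteria))  # A
```

Note that nothing in this sketch checks whether a flagged person actually did anything: the criteria are simply whatever was most common in the records it was given, so any bias in how those records were gathered passes straight through to who gets flagged.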

Ethical Dilemma

The humans who build such algorithms, which are themselves devoid of AI self-learning or any ability to acquire and apply new information and capacity, determine their outcome.

When these humans do not apply a deeper understanding or an ethical lens (one that accounts for the systemic oppression of certain groups in society, for instance) to the parameters they choose to analyze, or to the resulting statistical probabilities, they are bound to create algorithms that reinforce the systemic biases evident in society.

In short, they may miss a lot of criminals and identify a lot of non-criminals. In so doing, they may also ensure that more people from the same groups are pursued with the government's righteous rigour, resulting in higher incarceration rates for these groups. Rather than discovering what is real, the algorithm perpetuates a seriously biased reality that increasingly disadvantages specific groups. The past literally becomes the future.
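The "past becomes the future" loop can be illustrated with a toy simulation. This is a sketch under loudly simplified assumptions, not a model of any real justice system: two groups offend at exactly the same true rate, but group A starts with more records, and each round's scrutiny (hypothetical "checks") is allocated in proportion to past records. The function name `simulate` and all numbers are invented for illustration.

```python
def simulate(records_a, records_b, offence_rate, checks_per_round, rounds):
    """Allocate scrutiny in proportion to past records and return, for each
    round, group A's share of all records and the absolute gap (A minus B).

    Both groups are assumed to offend at the same true rate, so any
    disparity in records comes only from where scrutiny was directed."""
    history = []
    for _ in range(rounds):
        share_a = records_a / (records_a + records_b)
        # Scrutiny follows the past: more records means more checks.
        checks_a = checks_per_round * share_a
        checks_b = checks_per_round - checks_a
        # Equal offence rates, so new records depend only on checks made.
        records_a += checks_a * offence_rate
        records_b += checks_b * offence_rate
        history.append((records_a / (records_a + records_b), records_a - records_b))
    return history


if __name__ == "__main__":
    for share, gap in simulate(70, 30, 0.1, 1000, 5):
        print(f"share of records for group A: {share:.2f}, gap: {gap:.0f}")
```

Under these assumptions the initial 70/30 split never corrects itself toward 50/50, even though both groups behave identically, while the absolute gap in records widens every round (from 80 to 240 over five rounds in this example).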

AI governance as data governance

This discussion of today's algorithms, centred on big data and what we can and should do with it, was fascinating. Alas, it didn't paint a picture of artificial intelligence in the sci-fi sense for me.

In any case, as a result of this data focus, the AI governance discussion was heavy on data governance, i.e. the collection, storage and use of personal data. The Personal Information Protection and Electronic Documents Act (PIPEDA) and provincial laws already govern information that can identify an individual. Canada's Anti-Spam Legislation (CASL) tries to combat spam and other electronic threats. The National Do Not Call List regulates telemarketing by telephone. These legislative tools tend to deal with a specific technology, an approach that leaves many grey areas and gaps as companies explore and create more advanced technological innovations. Simply put, technology changes more rapidly than laws.

In the end, I feel it is this conundrum that AI governance should address: moving away from regulating one specific technology at a time and instead considering the notions of privacy and social licence we wish to adopt as a society.

Definitions of Artificial Intelligence

Artificial intelligence (AI) is an area of computer science that emphasizes the creation of intelligent machines that work and react like humans.

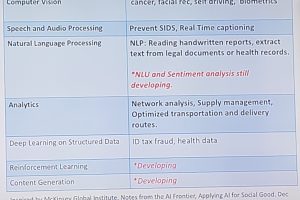

Some of the activities computers with artificial intelligence are designed for include:

- Speech recognition

- Learning

- Planning

- Problem solving

[Source: Techopedia]

4 Types of AI

- Reactive machines – e.g. the Deep Blue chess-playing machine
  - Reactive machines have no concept of the world and therefore cannot function beyond the simple tasks for which they are programmed.

- Limited memory – e.g. autonomous vehicles
  - Limited memory machines build on observational data in conjunction with the pre-programmed data they already contain.

- Theory of mind – e.g. current voice assistants, as an incomplete early version
  - Theory of mind AI would give machines decision-making ability equal to that of a human mind.

- Self-awareness – so far exists only in the movies
  - Self-aware AI involves machines that have human-level consciousness.

Source: G2